July 05, 2019 / 11 minutes / #AWS #ECS #EC2 #Docker #Containers

Bulletproof ECS Cluster - Best practices for creating secure, stable and cost-effective clusters on AWS

Running an ECS cluster on AWS can be troublesome. After a bunch of outages and war stories, here is a list of lessons learned the hard way to make your cluster secure and anti-fragile on a budget.

Host in private, expose via ALB/ELB

The load balancer should be your gateway to the cluster. When running a web service, make sure you're running your cluster in a private subnet and that your containers cannot be accessed directly from the internet. Ideally, each container should expose a random, ephemeral host port which is registered with a target group. Also make sure that traffic is only allowed from the load balancer's security group.
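As a rough sketch of the setup described above, the Python snippet below builds the two pieces of configuration involved: a container port mapping with `hostPort` set to 0 (which, in bridge network mode, asks ECS to pick an ephemeral host port) and a security group ingress rule that admits traffic only from the load balancer's security group. The function names, the security group ID and the default 32768-65535 port range are assumptions for illustration; check which ephemeral range your instances actually use.

```python
import json


def dynamic_port_mapping(container_port):
    """Port mapping for an ECS container definition (bridge network mode).

    hostPort 0 asks ECS to assign an ephemeral host port, which the service
    then registers with the load balancer's target group.
    """
    return {"containerPort": container_port, "hostPort": 0, "protocol": "tcp"}


def ingress_from_load_balancer(lb_security_group_id, from_port=32768, to_port=65535):
    """Ingress rule (EC2 IpPermissions shape) that references the load
    balancer's security group instead of an IP range.

    The default port range is an assumption; match it to the ephemeral
    port range of your container instances.
    """
    return {
        "IpProtocol": "tcp",
        "FromPort": from_port,
        "ToPort": to_port,
        "UserIdGroupPairs": [{"GroupId": lb_security_group_id}],
    }


if __name__ == "__main__":
    # "sg-0123456789abcdef0" is a placeholder ID, not a real security group.
    print(json.dumps(dynamic_port_mapping(8080), indent=2))
    print(json.dumps(ingress_from_load_balancer("sg-0123456789abcdef0"), indent=2))
```

Because the rule references a security group rather than a CIDR block, nothing outside the load balancer can reach the container ports, even from inside the VPC.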

Use Spot Instances

Spot Instances are a great way to save money on Compute Heavy/Worker clusters or for dev/staging environments. If you don't know what spot instances are, here's a quote from AWS Docs:

> Spot Instance is an unused EC2 instance that is available for less than the On-Demand price.

In reality, by using them you can save up to 80% of costs! But there's a catch. Spot Instances run on spare capacity, and AWS can reclaim that capacity whenever it needs it back, so be aware that your instances might be taken down at any time with only a two-minute warning.
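To make the interruption warning concrete: EC2 publishes the notice through the instance metadata service, and a process on the instance can poll it to learn how much time is left. The sketch below only parses the notice body and computes the remaining seconds; the actual HTTP polling is left out, and the function name is an assumption for illustration. When no interruption is scheduled, the metadata path returns a 404.

```python
import json
from datetime import datetime, timezone

# Instance metadata path where EC2 publishes the Spot interruption notice.
SPOT_ACTION_URL = "http://169.254.169.254/latest/meta-data/spot/instance-action"


def seconds_until_interruption(notice_body, now):
    """Return the seconds left before the instance is reclaimed.

    notice_body is the JSON document served at SPOT_ACTION_URL, for example
    {"action": "terminate", "time": "2019-07-05T10:02:00Z"}.
    now must be a timezone-aware datetime.
    """
    notice = json.loads(notice_body)
    deadline = datetime.strptime(notice["time"], "%Y-%m-%dT%H:%M:%SZ")
    deadline = deadline.replace(tzinfo=timezone.utc)
    return max(0.0, (deadline - now).total_seconds())


if __name__ == "__main__":
    sample = '{"action": "terminate", "time": "2019-07-05T10:02:00Z"}'
    now = datetime(2019, 7, 5, 10, 0, 0, tzinfo=timezone.utc)
    print(seconds_until_interruption(sample, now))
```

On ECS container instances you may not need to write this yourself: setting `ECS_ENABLE_SPOT_INSTANCE_DRAINING=true` in `/etc/ecs/ecs.config` lets a recent ECS agent put the instance into draining state when the notice arrives.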

Use Parameter Store for environment variables

In a container's task definition you can specify environment variables directly; however, that's not the best way to pass config variables to the container. It's not secure, it couples infrastructure with env variables, and changing them requires you to change the task definition and perform a new deployment. Instead, environment variables should be injected at container start from AWS Systems Manager (SSM) Parameter Store.

It's a secure, encrypted, auditable, scalable and managed central source of truth which can be used not only by ECS but also by Lambda, EC2, CloudFormation and many more. Moreover, it integrates nicely with other AWS services, so once a variable changes, you can react to that event. More on that on the official AWS blog.
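In a task definition, Parameter Store values are referenced through the `secrets` list of a container definition, where each entry maps an environment variable name to a parameter ARN. The helper below is a minimal sketch of building that list; the function name, account ID and parameter names are made up for illustration.

```python
import json


def ssm_secrets(region, account_id, parameters):
    """Build the `secrets` list of an ECS container definition.

    parameters maps environment variable names to Parameter Store names,
    for example {"DB_PASSWORD": "/myapp/prod/db_password"}.
    """
    secrets = []
    for env_name, param_name in sorted(parameters.items()):
        # The leading slash of the parameter name is not repeated in the ARN.
        arn = "arn:aws:ssm:{}:{}:parameter/{}".format(
            region, account_id, param_name.lstrip("/")
        )
        secrets.append({"name": env_name, "valueFrom": arn})
    return secrets


if __name__ == "__main__":
    # Placeholder account ID and parameter name.
    example = ssm_secrets(
        "us-west-2", "123456789012", {"DB_PASSWORD": "/myapp/prod/db_password"}
    )
    print(json.dumps({"secrets": example}, indent=2))
```

For this to work, the task execution role needs permission to read the parameters (`ssm:GetParameters`), and the container instance must run an ECS agent recent enough to support the feature.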

Update ECS Agent on EC2 instance start

> service MY-SERVICE was unable to place a task because no container instance met all of its requirements.

That's the error we saw one day when investigating an outage. "What's wrong?", we kept asking ourselves. After digging for a while, we found the root cause.

We were running our cluster on Spot instances, and AWS had terminated them. Luckily, our Auto Scaling group re-spawned them to maintain the desired number of running instances, but there was one problem. The AMI had an outdated ECS agent installed which was not capable of pulling SSM parameters from the store, so the task repeatedly failed to start.

Solution? Include these simple lines in your EC2 Auto Scaling group user data to ensure the ECS agent is up to date on launched instances (use only the restart command that matches your AMI):

    sudo yum update -y ecs-init

    # Depending on AMI

    ### On ECS-optimized Amazon Linux 2 AMI
    sudo systemctl restart docker

    ### On ECS-optimized Amazon Linux AMI
    sudo service docker restart && sudo start ecs

Scale instances based on reservations, not usage

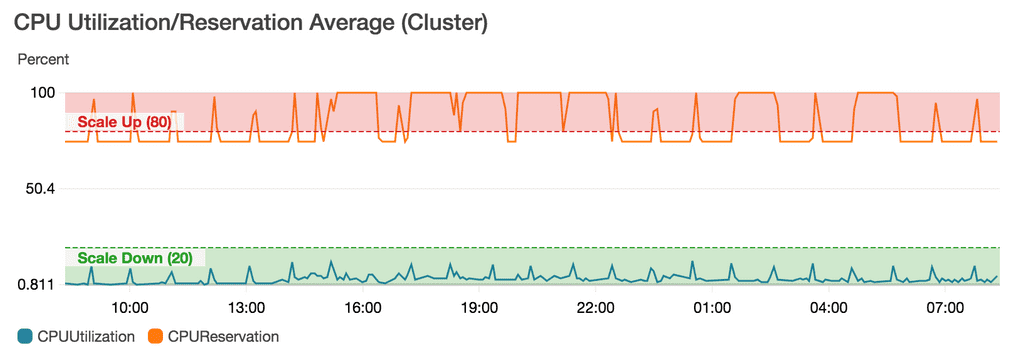

Remember the "service MY-SERVICE was unable to place a task..." error? Another cause might simply be that your cluster does not have enough resources to place the task. It's a very common scenario: CPU reservation goes up to 80% while actual usage stays very low, so a usage-based policy never triggers a scale-up operation.

Typical scaling operation problem. Usage very low but reservations quite high.

That's why you want to scale the EC2 machines running your cluster based on memory or CPU reservation instead of usage. Your services should scale based on usage; as the number of tasks grows, the amount of reserved resources grows too, which effectively triggers an Auto Scaling group operation on the underlying EC2 instances.
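The difference between reservation and usage is easy to see with a little arithmetic. The sketch below uses made-up numbers: two instances with 2048 CPU units each, of which 1640 are already reserved by running tasks. The function names are assumptions for illustration, not part of any AWS API.

```python
def cluster_reservation_percent(reserved_units, registered_units):
    """Share of the registered capacity that tasks have reserved, in percent."""
    total = sum(registered_units)
    if total == 0:
        return 0.0
    return 100.0 * sum(reserved_units) / total


def can_place_task(task_cpu, instances):
    """True if any single instance has enough unreserved CPU for the task.

    instances is a list of (registered_cpu, reserved_cpu) tuples.
    """
    return any(registered - reserved >= task_cpu for registered, reserved in instances)


if __name__ == "__main__":
    instances = [(2048, 1640), (2048, 1640)]  # hypothetical cluster
    reservation = cluster_reservation_percent(
        [reserved for _, reserved in instances],
        [registered for registered, _ in instances],
    )
    print("CPU reservation: %.1f%%" % reservation)
    print("Can place a 512-unit task:", can_place_task(512, instances))
```

Here the cluster reports roughly 80% CPU reservation, yet a task that needs 512 CPU units cannot be placed on either instance, no matter how idle the CPUs actually are. An alarm on the `CPUReservation` or `MemoryReservation` cluster metric catches this situation; an alarm on utilization does not.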

Adjust healthcheck grace period and scaling cooldowns

Knowing approximately how long it takes for your container to start is crucial for adjusting the healthcheck grace period correctly. If you configure it too aggressively, your instances which are still launching might be prematurely marked as unhealthy and killed by the scheduler before they even manage to start. This will trigger a never-ending cycle of instances being provisioned and killed. In most cases, increasing this period should solve your problem.

On the other hand, making the grace period too long might lead to slow deployments and make your cluster scale poorly. My advice is to start from 60 seconds and check whether that amount is enough.
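One way to pick a value is to derive it from measured startup times. The heuristic below (slowest observed start multiplied by a safety factor, never below the 60-second starting point) is my own assumption rather than an AWS recommendation, and the function names are hypothetical. The `healthCheckGracePeriodSeconds` key is the parameter name used by the ECS service API.

```python
import math


def suggest_grace_period(startup_seconds, safety_factor=1.5, minimum=60):
    """Suggest a healthcheck grace period from observed container startup times.

    Heuristic (an assumption, not an AWS rule): slowest observed start
    multiplied by a safety factor, never below `minimum` seconds.
    """
    if not startup_seconds:
        return minimum
    return max(minimum, math.ceil(max(startup_seconds) * safety_factor))


def service_update(cluster, service, grace_period):
    """Keyword arguments shaped like an ECS UpdateService request."""
    return {
        "cluster": cluster,
        "service": service,
        "healthCheckGracePeriodSeconds": grace_period,
    }


if __name__ == "__main__":
    observed = [38, 52, 90]  # hypothetical startup times in seconds
    print(service_update("my-cluster", "MY-SERVICE", suggest_grace_period(observed)))
```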

Use Target Tracking Scaling instead of Step Scaling

Unlike step scaling, where you set up two thresholds for scale-up and scale-down operations, with target tracking scaling the service automatically calculates the number of tasks/instances needed to keep the metric at, or close to, the specified value. This is not only easier to set up, as it requires much less configuration, but in my experience also more effective.
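For illustration, here is roughly what a target tracking policy for an ECS service looks like, next to a simplified estimate of the capacity it aims for. The configuration keys follow the Application Auto Scaling target tracking policy shape; the cooldown defaults and the proportional formula are assumptions of this sketch, since the exact logic the service uses is not published.

```python
import math


def target_tracking_policy(target_cpu_percent, scale_out_cooldown=60, scale_in_cooldown=300):
    """Configuration shaped like a TargetTrackingScalingPolicyConfiguration
    for an ECS service. The cooldown defaults are arbitrary example values."""
    return {
        "TargetValue": float(target_cpu_percent),
        "PredefinedMetricSpecification": {
            "PredefinedMetricType": "ECSServiceAverageCPUUtilization"
        },
        "ScaleOutCooldown": scale_out_cooldown,
        "ScaleInCooldown": scale_in_cooldown,
    }


def estimate_desired_count(current_count, current_metric, target_value):
    """Simplified proportional estimate of the task count that would bring
    the metric back to the target. Illustration only, not the real algorithm."""
    return max(1, math.ceil(current_count * current_metric / target_value))


if __name__ == "__main__":
    print(target_tracking_policy(60))
    # 4 tasks running at 90% average CPU with a 60% target.
    print(estimate_desired_count(4, 90, 60))
```

With step scaling you would have to encode the same behavior as a set of alarm thresholds and adjustment steps; here a single target value is enough.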

Or maybe even try custom scaling strategy with Events inside Lambda

If the autoscaling options provided by AWS are not sufficient for your needs, you can always implement custom scaling logic inside a Lambda function which reacts to ECS events, metrics and alarms. You can find more on that here.

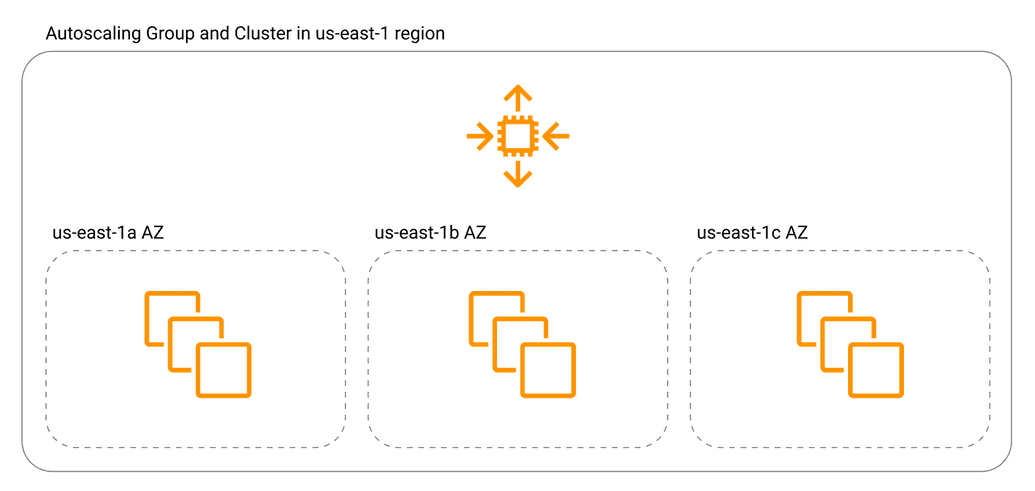
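A custom scaling function could look something like the sketch below. It reacts to an ECS service event that reports a task placement failure and bumps the desired capacity by one, up to a maximum. The event field values follow the ECS service action event format as I understand it, so verify them against the events your account actually emits. `CURRENT_CAPACITY` and `MAX_CAPACITY` are hypothetical environment variables standing in for a real lookup of the Auto Scaling group, and applying the result through the AWS SDK is left out.

```python
import os


def desired_capacity_after(event, current_capacity, max_capacity):
    """Return the new desired instance count for a given ECS event.

    Adds one instance when the service could not place a task and the
    cluster is still below its maximum size; otherwise changes nothing.
    """
    detail = event.get("detail", {})
    placement_failed = (
        event.get("source") == "aws.ecs"
        and event.get("detail-type") == "ECS Service Action"
        and detail.get("eventName") == "SERVICE_TASK_PLACEMENT_FAILURE"
    )
    if placement_failed and current_capacity < max_capacity:
        return current_capacity + 1
    return current_capacity


def handler(event, context):
    """Lambda entry point (sketch).

    A real function would read the current capacity from the Auto Scaling
    group and apply the new value with the AWS SDK instead of returning it.
    """
    current = int(os.environ.get("CURRENT_CAPACITY", "1"))
    maximum = int(os.environ.get("MAX_CAPACITY", "10"))
    desired = desired_capacity_after(event, current, maximum)
    return {"currentCapacity": current, "desiredCapacity": desired}
```

Keeping the decision in a pure function like `desired_capacity_after` makes the scaling logic easy to unit test without touching AWS.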

Go multi-AZ

AZ stands for Availability Zone; you can think of an AZ as an independent data center. There have been cases in the past when whole AWS AZs went down, so it's likely to happen again in the future. However, it's quite simple to protect your cluster from a similar outage: just distribute your machines across multiple AZs.

While the Auto Scaling group manages the availability of the cluster across multiple AZs to give you resiliency and dependability, the ECS scheduler manages task distribution across the underlying machines in different zones, effectively making your cluster highly available and independent of single-AZ failures.

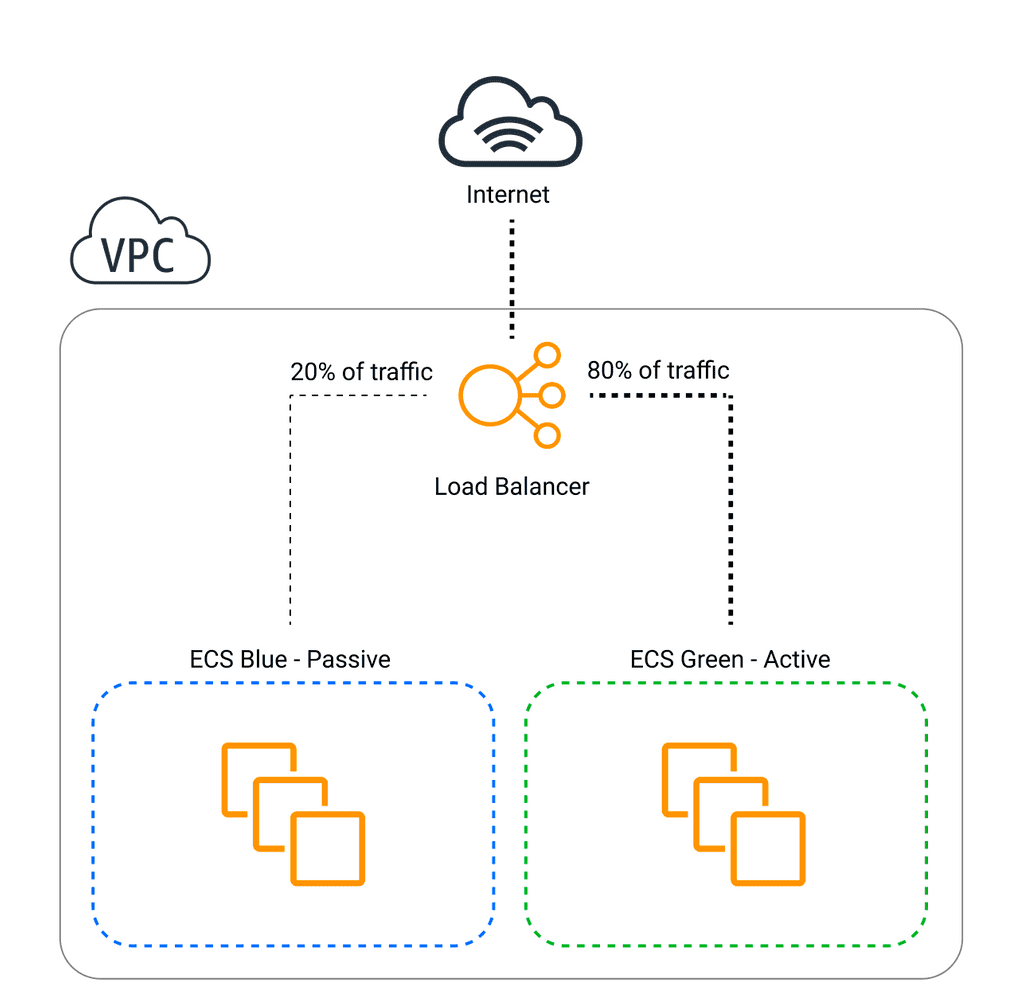
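On the ECS side, task distribution across zones can be requested explicitly with a placement strategy on the service. The sketch below shows a strategy that spreads tasks across Availability Zones first and then across instances, plus a small hypothetical helper that checks whether a set of running tasks would survive the loss of one zone.

```python
from collections import Counter


def multi_az_placement_strategy():
    """placementStrategy for an ECS service: spread tasks across
    Availability Zones first, then across instances within each zone."""
    return [
        {"type": "spread", "field": "attribute:ecs.availability-zone"},
        {"type": "spread", "field": "instanceId"},
    ]


def survives_zone_failure(task_zones):
    """True if some tasks would still be running after losing any single zone.

    task_zones lists the Availability Zone of each running task.
    """
    return len(Counter(task_zones)) >= 2


if __name__ == "__main__":
    print(multi_az_placement_strategy())
    print(survives_zone_failure(["us-west-2a", "us-west-2b", "us-west-2a"]))
```

The strategy only helps if the Auto Scaling group itself spans subnets in more than one zone; otherwise there is nothing to spread across.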

Configure blue-green deployment

One challenge of achieving full agility in engineering is making deployments a breeze for engineers, rather than a task requiring downtime and the whole team keeping a finger on the pulse. That's where blue-green deployment comes into play.

When performing a deployment, an exact copy of the current environment/service is spawned and traffic is gradually routed to the new target, increasing by a few percent each minute until it eventually reaches 100% on the new unit. Once all the traffic goes to the new target and it's stable, the green unit is decommissioned and the new version, aka "blue", becomes the new standard, aka "green". If the new version malfunctions, for example by responding with too many 400s, the procedure is stopped and traffic is fully rolled back to green.

This method allows your dev team to push a new version of the code a few times a day without worrying about downtime or about pushing faulty code to production. You can read more about it on the official AWS blog.
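The gradual shift with automatic rollback can be illustrated with a small simulation. The function below is a sketch, not how any AWS service implements it: it moves traffic to the new version in fixed steps and rolls back to 0% as soon as the observed error rate exceeds a threshold. The function name, the step size and the error threshold are assumptions.

```python
def linear_shift(step_percent, error_rates, max_error_rate):
    """Simulate a linear traffic shift to the new version.

    error_rates[i] is the error rate observed on the new version after
    step i. Returns the sequence of traffic weights (in percent) sent to
    the new version; a trailing 0 means the deployment was rolled back.
    """
    weights = []
    weight = 0
    for observed in error_rates:
        weight = min(100, weight + step_percent)
        weights.append(weight)
        if observed > max_error_rate:
            weights.append(0)  # roll all traffic back to the old version
            break
        if weight == 100:
            break
    return weights


if __name__ == "__main__":
    healthy = linear_shift(25, [0.0, 0.01, 0.0, 0.0], max_error_rate=0.05)
    faulty = linear_shift(25, [0.0, 0.2, 0.0, 0.0], max_error_rate=0.05)
    print("healthy rollout:", healthy)
    print("faulty rollout:", faulty)
```

With CodeDeploy you don't write this loop yourself; predefined deployment configurations such as `CodeDeployDefault.ECSLinear10PercentEvery1Minutes` describe the step size and interval, and CloudWatch alarms can trigger the rollback.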

Use IaC (Infrastructure as Code)

Tools like Terraform, CloudFormation or Cloud Development Kit (CDK) are a perfect way to provision your cluster in a reproducible way. This is especially helpful when your client requests an additional environment or you need to run dynamic, per-branch staging environments. Moreover, you can find many plug'n'play, really well-made modules which follow these best practices and will create such a cluster with a single click.

Use CloudWatch logs

To make troubleshooting of your applications much easier, always push your containers' STDOUT logs to CloudWatch. You can do that simply by adding the following lines to containerDefinitions:

    "logConfiguration": {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": "awslogs",
            "awslogs-region": "us-west-2",
            "awslogs-stream-prefix": "awslogs-example"
        }
    }

Don't forget to attach the necessary IAM permissions: logs:CreateLogGroup, logs:CreateLogStream, logs:PutLogEvents, logs:DescribeLogStreams.
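Expressed as a policy document, those permissions could look like the sketch below. The helper name is hypothetical, and the wildcard resource is only a placeholder; in practice you would probably narrow it to the ARN of your log group.

```python
import json


def logging_policy(resource="*"):
    """IAM policy document granting the CloudWatch Logs permissions listed above.

    The default wildcard resource is a placeholder; restrict it to your
    log group ARN where possible.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                    "logs:DescribeLogStreams",
                ],
                "Resource": resource,
            }
        ],
    }


if __name__ == "__main__":
    print(json.dumps(logging_policy(), indent=2))
```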

As soon as they are in CloudWatch, you can very easily find relevant information using CloudWatch Logs Insights.

Also, in your application code, remember to replace the \n line endings inside multiline log messages with \r so that each multiline string is saved as one entry. This will not only save you money, but also make your logs greppable.
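A minimal sketch of that line-ending trick in Python: newlines inside a message are replaced with carriage returns, and only the final newline, which terminates the entry, is kept. The function names are assumptions for illustration.

```python
import sys


def to_single_entry(message):
    """Replace newlines inside a log message with carriage returns so the
    whole message is written as one line."""
    return message.replace("\r\n", "\r").replace("\n", "\r")


def emit(message, stream=sys.stdout):
    """Write one log entry; only the trailing newline ends the entry."""
    stream.write(to_single_entry(message) + "\n")


if __name__ == "__main__":
    emit("Traceback (most recent call last):\n  File \"app.py\", line 1\nValueError: boom")
```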

-- Written by Rafal Wilinski. Founder of Dynobase - Professional GUI Client for DynamoDB